
Welcome to AI meets Politics at Exeter!

This website presents the AI-related research I am currently leading. I will post results and papers here once they are accepted for publication. The key projects include the following.


The Politics of AI

Who sees AI as a threat and who sees AI as an opportunity? What role do labor market skills play in how citizens form attitudes towards AI? And how does AI affect political conflict and existing cleavages in Europe? AI is profoundly transforming our everyday lives. Some of these changes may be perceived as, or turn out to be, disruptive: people might worry about losing their jobs as AI is used more widely in their sector, or about downward pressure on their wages. In this project, my coauthor and I develop a theoretical model that explains the conditions under which citizens in Europe see AI as a threat, we test this model empirically, and we aim to contribute to the discussion on policies that address voters' concerns. The project also aims to show how AI affects existing cleavages (see Manschein 2023 for a related piece) and to point to new challenges for democracies.

Gender, Biases and AI

As AI becomes more prevalent in everyday life, it is important to bear in mind that current applications are not necessarily unbiased. This is not surprising: the training data that programs such as ChatGPT rely on include stereotypes, so the output is biased, too; moreover, the fact that the sectors driving the development of AI are often male-dominated might add to this problem (Parsheera 2018).

The first project examines gender differences in how citizens perceive AI. Surprisingly, some research (e.g. Budeanau et al. 2023, using Eurobarometer data from 2021) finds no gender differences. However, there are reasons to expect gender differences in perceptions of AI (and, in fact, intersectionality is likely to shape views, too). In my work with UK data, I find substantively and statistically significant gender differences: for instance, women feel less confident about their understanding of what AI is, and they are less optimistic about its impact (for related findings, see Borwein et al. 2023). The findings on subjective understanding resonate with a well-known pattern: at a given level of objective knowledge, men are more likely than women to report high confidence in their knowledge (Lundeberg et al. 1994). Thus, there might be no objective differences in understanding, but the differences still matter because self-reported understanding is linked to feeling threatened by AI. This project will examine more closely how gender shapes perceptions of AI.

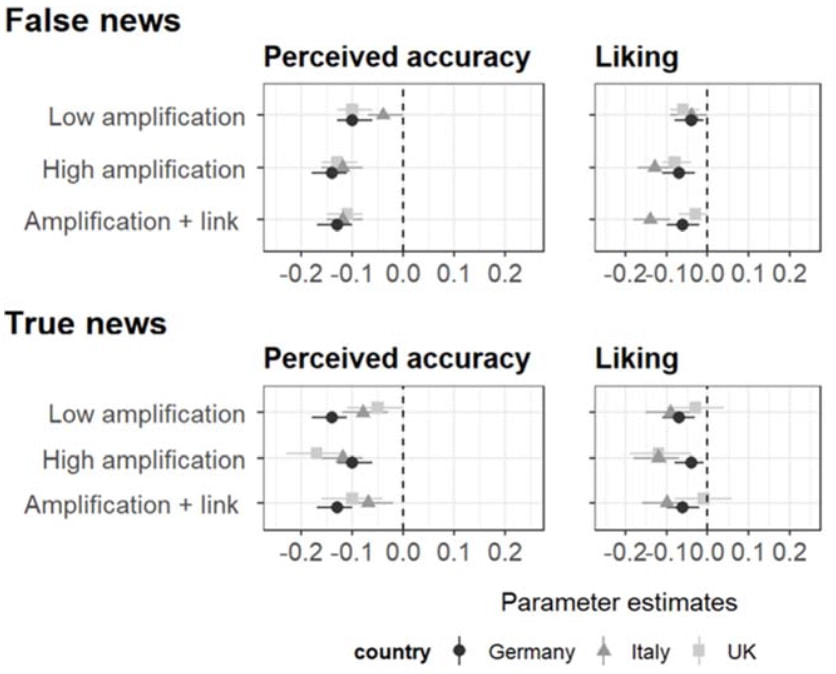

What can we do against misinformation on social media?

Our main research question is whether people perceive fake news as less accurate when other users have already posted social corrections, e.g. comments that identify a post or a piece of information as incorrect. Our initial results show that social corrections help others when they encounter factually incorrect social media content: factually incorrect posts with comments from other users flagging the incorrect content are perceived as less accurate than factually incorrect posts that have not been corrected. This seems to be good news: fact-checking content and leaving comments can make a difference! However, what happens when a comment labels correct information as false? That is what we analysed in the second part of our fieldwork. Our initial results show that skeptical comments can make people less likely to believe real news. Thus, while comments from other people can be helpful in the context of incorrect information, they can be harmful in the context of correct information: when others cast doubt, people are less likely to believe social media content even when it is correct.

City council, Berlin Treptow-Köpenick. Photo source: Assenmacher, Wikipedia (License: CC BY-SA 3.0)


The Role of AI in the Provision of Public Services

There is vast potential for AI in the provision of public services, and it is likely to transform how citizens interact with the state in their daily lives (Misuraca et al. 2020, Henman 2020, Gesk and Leyer 2022). The work I plan in this line of research focuses specifically on Germany, which is a distinct case: for instance, German citizens' privacy concerns differ from those of citizens in other European countries and particularly from those in the US and UK (e.g. in the context of the German COVID-19 contact tracing app, concerns over data privacy led to unique challenges). Put simply, AI applications that can be implemented in other countries might stir opposition in Germany.


AI and University Education

How does AI change how we learn and teach at universities? What should teaching focus on, given the availability of AI tools such as ChatGPT? How can university classes in general, and political science classes in particular, prepare students for a job market that expects them to be proficient users of AI? These are questions I am currently discussing with my students and colleagues in order to contribute answers and solutions that help all of us in the university sector.

The picture above was created with DALL-E in ChatGPT. Please note the high energy consumption of image generation; more info is here.


Links to inspiring resources related to Politics and AI:

Introductions for beginners:

# 1 Introduction to AI, free online course of the University of Helsinki

# 2 Financial Times, Video: "AI a blessing or a curse for humanity?"

# 3 Sustainability and AI: Making an image with generative AI uses as much energy as charging your phone (MIT Technology Review, Dec 1, 2023)

Research Groups & Programmes:

# 1 Digitalization and Democracy, Aspen Institute

# 2 KI-Mensch-Gesellschaft: Institut für Sozialwissenschaftliche Forschung e.V.

# 3 Oxford University, Research Programme on AI, Government and Policy

# 4 WZB: Consequences of AI for democracy and political participation

# 5 DVPW (Deutsche Vereinigung für Politikwissenschaft) working group "Digitalisierung und Politik"

# 6 Humboldt-Institut für Internet und Gesellschaft (HIIG) research group "Public Interest AI"

# 7 Centre for the Governance of AI

# 8 The Governance and Responsible AI Lab (GRAIL) at Purdue University

Research & Discussion:

# 1 Large Language Models in Political Science (review article in Frontiers in Political Science, Vol. 5, 2023)

# 2 Sustainability and AI: Making an image with generative AI uses as much energy as charging your phone (MIT Technology Review, Dec 1, 2023)

# 3 Institutional factors driving citizen perceptions of AI in government: Evidence from a survey experiment on policing

# 4 Article "In AI we trust? Perceptions about automated decision-making by artificial intelligence"

# 5 Article (based on a conjoint experiment): "Public preferences for governing AI technology: Comparative evidence"

# 6 Article on biased public administration decisions: "Bureaucrat or artificial intelligence: people’s preferences and perceptions of government service"
